Mashooq Badar

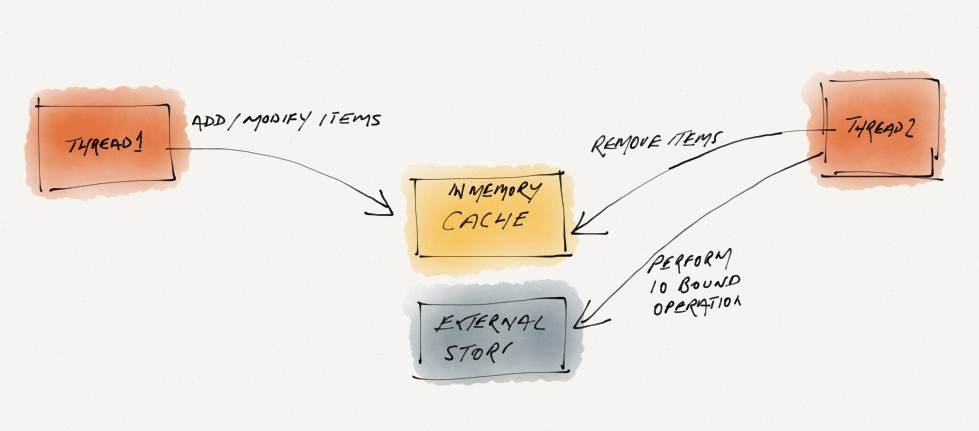

The first rule of using locks for thread synchronisation is: "Do NOT use them!" Recently I saw an implementation that made heavy use of locks to synchronise access to a cache shared between two threads. The overall approach is explained in the diagram below:

Why not do the whole thing in a single thread? Because the operations on the External Store are very time-consuming, and Thread-1 does not need to wait for them. So how do you solve this without using lock-based synchronisation?

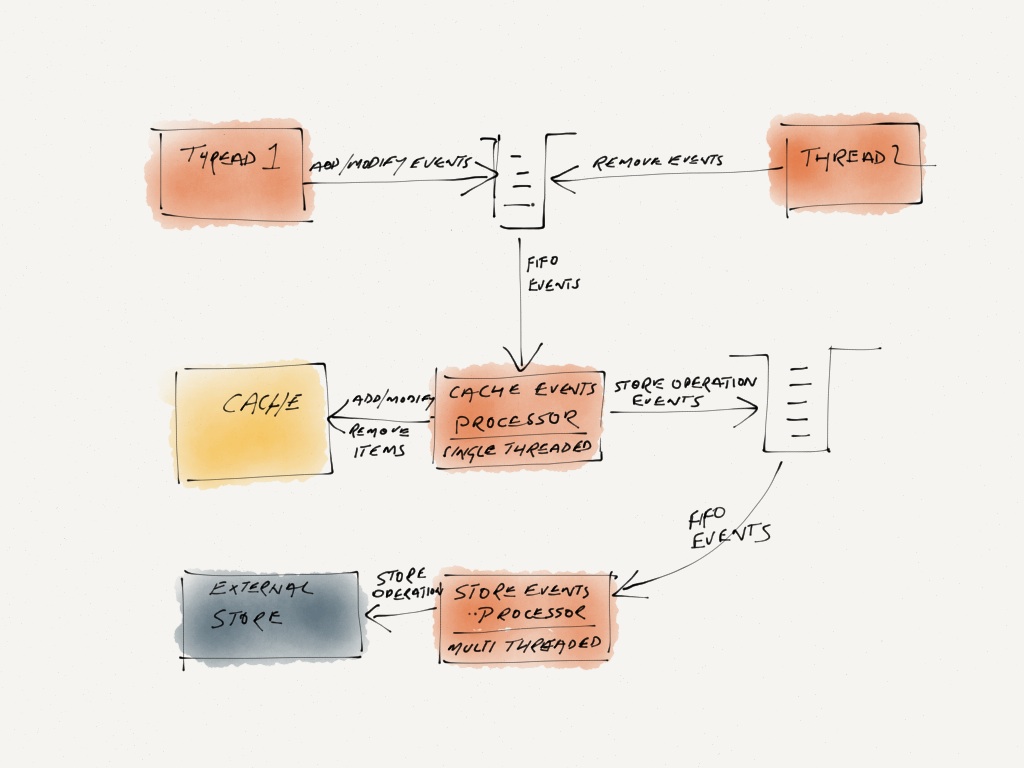

The operations on the cache are very quick and can all be done in a single thread, even though they originate from multiple threads. We can funnel them through that single thread by using a thread-safe queue, as explained in the following diagram:

Although this solution looks more complicated, the key advantage is that you do not need to write any low-level thread synchronisation yourself. Most mainstream programming languages already provide thread-safe queues in their standard libraries (for example, Java's `BlockingQueue` or Python's `queue.Queue`). You can also scale up by using a thread pool for the operations on the external store.
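To make the idea concrete, here is a minimal Python sketch of the queue-based approach. It is an illustration under assumptions, not the implementation from the system described above: the operation names `put` and `evict`, the `slow_store_write` stand-in, and the `run_demo` helper are all hypothetical. The point it shows is that only one thread ever touches the cache, while every other thread just enqueues a request.

```python
import queue
import threading
import time
from concurrent.futures import ThreadPoolExecutor

_STOP = object()  # sentinel that tells the cache thread to exit


def slow_store_write(key, value):
    """Hypothetical stand-in for a time-consuming external-store operation."""
    time.sleep(0.01)
    return key


def cache_worker(ops, cache):
    """The only thread that ever reads or writes `cache`."""
    while True:
        op = ops.get()
        if op is _STOP:
            break
        kind, key, value = op
        if kind == "put":
            cache[key] = value
        elif kind == "evict":
            cache.pop(key, None)


def run_demo():
    ops = queue.Queue()  # thread-safe queue from the standard library
    cache = {}
    owner = threading.Thread(target=cache_worker, args=(ops, cache))
    owner.start()

    # Playing the role of Thread-1: enqueue cache updates and move on
    # without waiting for the external store.
    for i in range(5):
        ops.put(("put", f"k{i}", i))

    # Pool threads do the slow store writes, then ask the cache thread
    # (via the same queue) to evict the persisted entry.
    def persist_then_evict(key, value):
        slow_store_write(key, value)
        ops.put(("evict", key, None))
        return key

    with ThreadPoolExecutor(max_workers=2) as pool:
        futures = [pool.submit(persist_then_evict, f"k{i}", i) for i in range(3)]
    persisted = [f.result() for f in futures]

    ops.put(_STOP)
    owner.join()
    return cache, persisted
```

Calling `run_demo()` leaves only the entries that were never persisted in the cache. No lock appears anywhere in the code; the queue is the only point where threads meet.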

Note: in both of the above approaches we need to ensure that the cache does not grow indefinitely. In the case of the queue-based approach, we can use a queue that blocks once a maximum capacity is reached. In the case of the lock-based approach, the cache itself will need to block.
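As a rough sketch of the blocking behaviour, Python's `queue.Queue` accepts a `maxsize` argument, and `put` then waits while the queue is full. The `bounded_demo` helper and the capacity of 2 are assumptions chosen for illustration; a short timeout is used so that the example reports the blocking instead of hanging.

```python
import queue


def bounded_demo(capacity=2):
    ops = queue.Queue(maxsize=capacity)  # put() blocks once `capacity` items are queued

    for i in range(capacity):
        ops.put(i)

    # The queue is full, so this put waits. With a timeout it gives up
    # and raises queue.Full instead of waiting forever.
    try:
        ops.put("overflow", timeout=0.05)
        blocked = False
    except queue.Full:
        blocked = True

    # Once a consumer takes an item, there is room again.
    first = ops.get()
    ops.put("overflow", timeout=0.05)
    return blocked, first, ops.qsize()
```

In a real producer you would normally call `put` without a timeout, so that a fast producer is simply slowed down to the pace of the consumer.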

Related Blogs

- By Felipe Fernández

- ·

- Posted 11 Jul 2016

State in scalable architectures

- By Sandro Mancuso

- ·

- Posted 23 Oct 2017

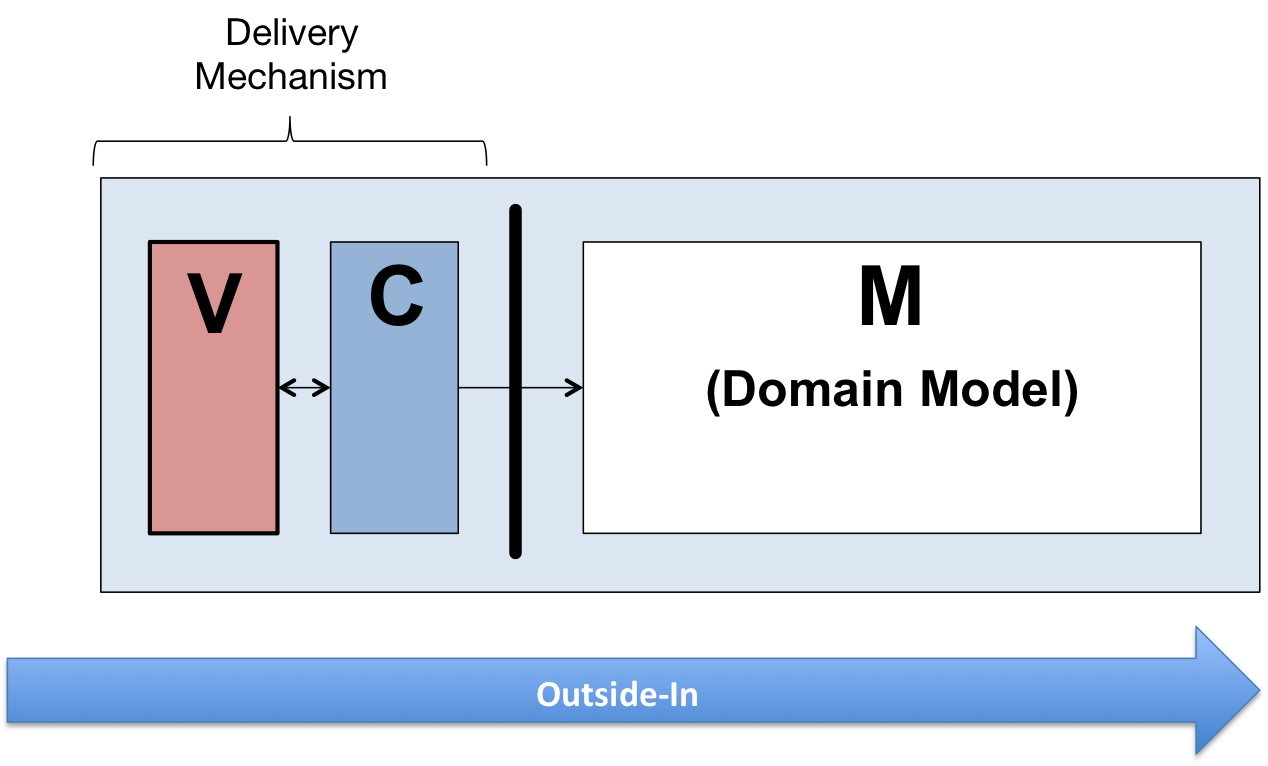

A Case for Outside-In Development

Get content like this straight to your inbox!

Software is our passion.

We are software craftspeople. We build well-crafted software for our clients, we help developers to get better at their craft through training, coaching and mentoring, and we help companies get better at delivering software.

Latest Blogs

Software Modernisation and Employee Retention...

Becoming PCI Compliant

Codurance signs the Microsoft Partner...

Useful Links

Contact Us

London, EC1M 5PU

Phone: +44 207 4902967

2 Mount Street

Manchester, M2 5WQ

Phone: +44 161 302 6795

Carrer de Pallars 99, 4th floor, room 41

Barcelona, 08018

Phone: +34 937 82 28 82

Email: hello@codurance.com