- By André Guelfi Torres

- Posted 16 Apr 2019

About one year ago I had my first contact with Docker. This new kid on the block promised to relieve our poor computers from installation of all tools, languages, dependencies and operating systems. Isolated run environments emerged on developers' computers.

While my ops teammates chose a more conservative approach, I started to use Docker with great joy. Despite many people describing Docker as a tool written by developers for development, our industry found new ways of using images and containers. Images of our applications and services became deployment units for tools like Kubernetes, Docker Swarm or Marathon.

But how are these images created?

From a developer's perspective, any application is manifested by its code, but there is still a long way to go before this code finds its way to a production environment. I want to show you how this process can be easier with Docker and a Continuous Deployment pipeline.

First of all, we need a small application with an HTTP API that we can call after it's deployed. Let's assume that we are using Gradle to build the application and TeamCity as a Continuous Integration server.

We need to have Docker installed on each TeamCity build agent. In this example the agent machine will also run our application. In a real project we wouldn't install TeamCity agents on every machine that hosts the application. Instead we would use a tool like Kubernetes to take care of distributing it.

As a first step in our Continuous Deployment pipeline, before we even think about Docker images, we need to build our application. In this step we will download the source code, run all tests and produce an artifact containing all elements required to start and run our application.

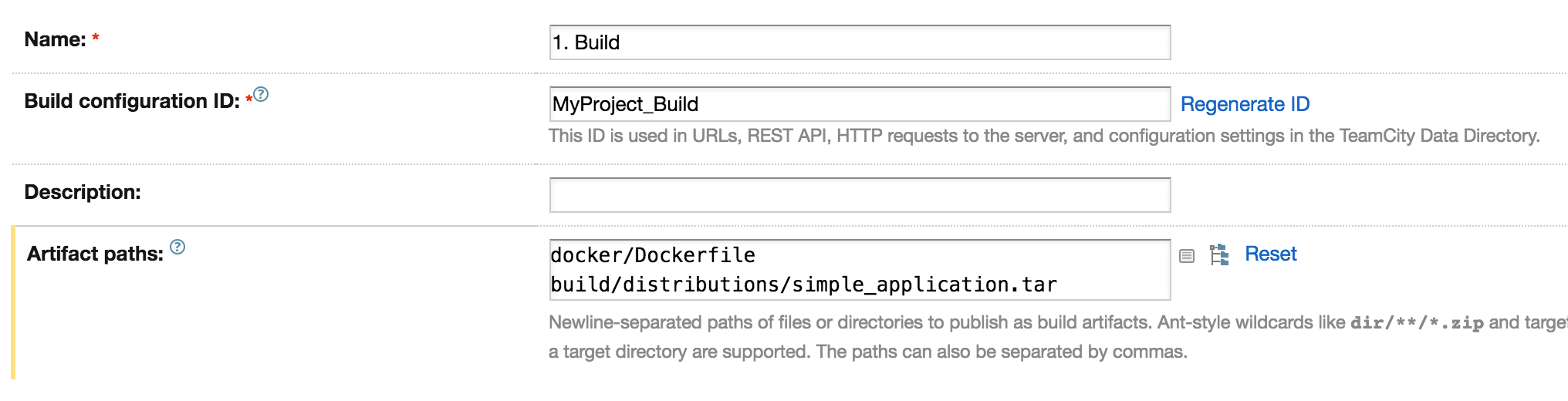

This build configuration is not very different from a step in a Continuous Deployment pipeline without Docker. Alongside the common parameters we have to define artifacts, which will be generated after each build run. We are going to use them as a base for the next steps in the pipeline.

In TeamCity we define artifacts by defining paths to files from the working directory (which is created for each run of a configuration). The working directory is a combination of the files downloaded from a version control system and the files generated during the execution of build steps. The working directory elements are defined in the version control settings and build steps.

We need two artifacts. The first one is the Dockerfile. We have already added this file to our source code, where it is stored in the docker directory. The second one is a tar file generated by our Gradle build. It contains all required libraries and a script that starts our application.

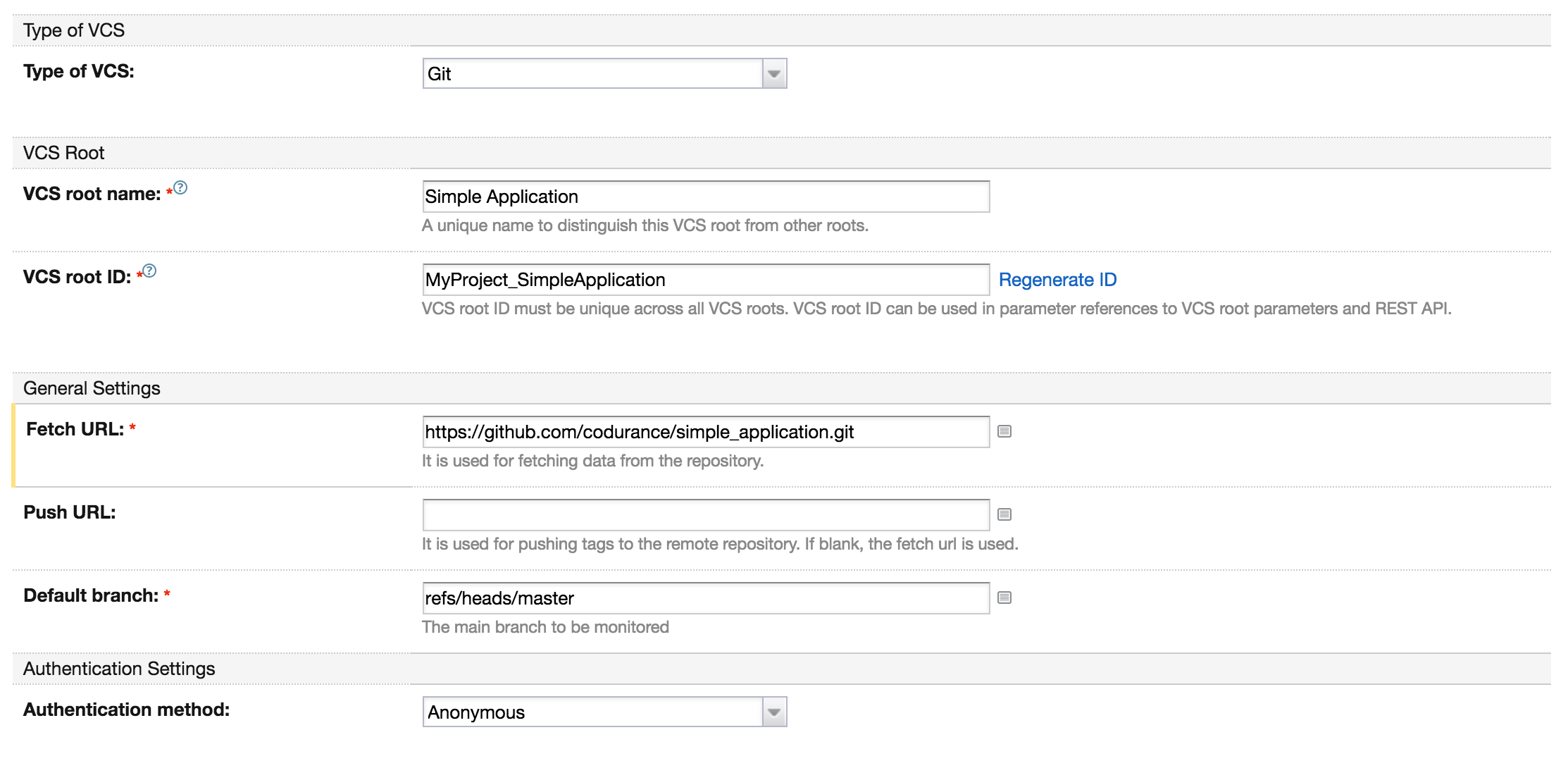

Now we are ready to instruct our build configuration how to download our source code. We use GitHub as our code repository, so we just have to choose Git as the type of our Version Control System and provide the URL to our application.

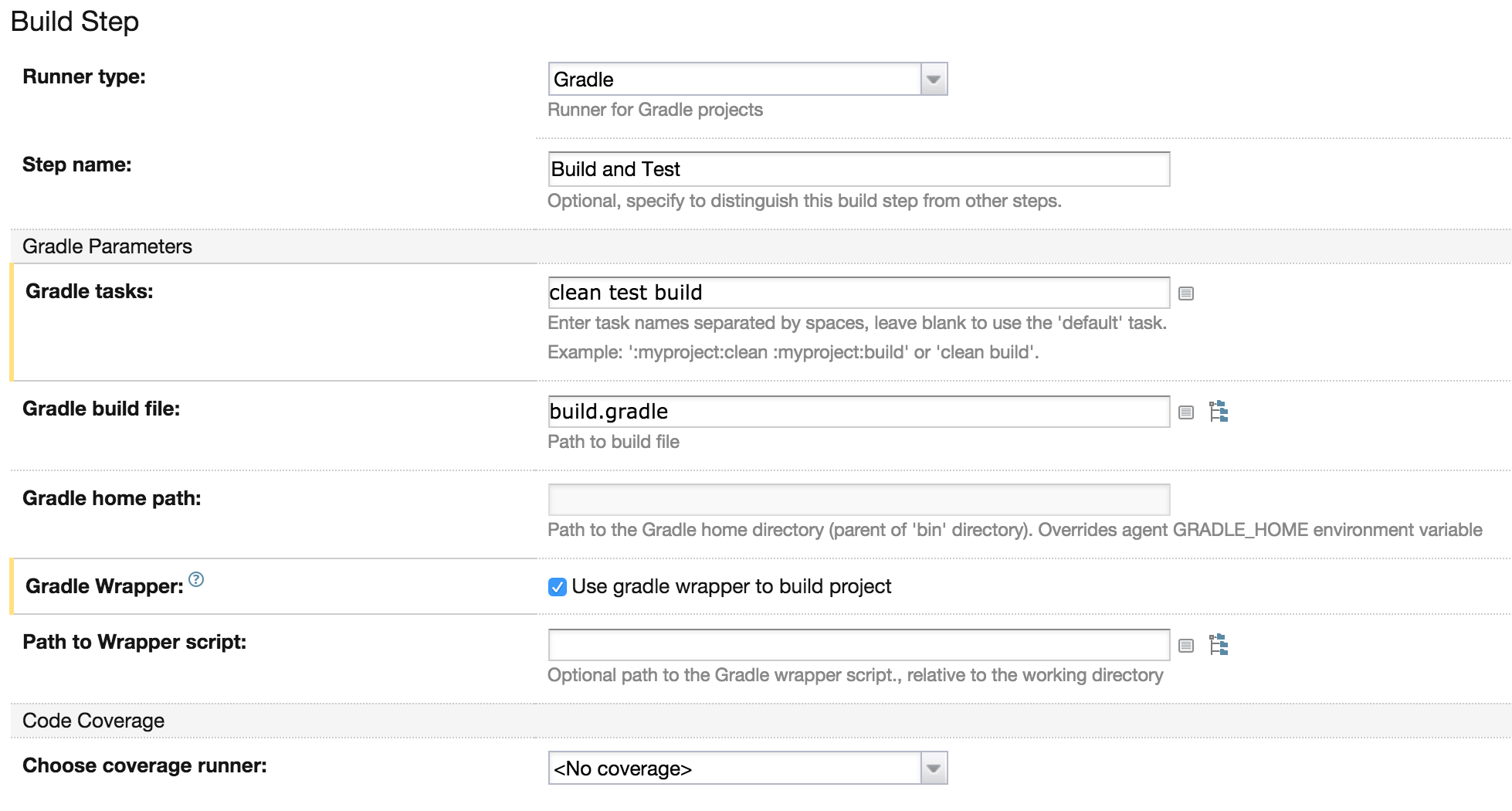

With the source code in our working directory we can describe what to do with it. As I mentioned before, we run all the tests and generate an artifact containing the start script and libraries.

TeamCity has predefined runners for different build tools. In this case we are going to use the Gradle runner to test and build.

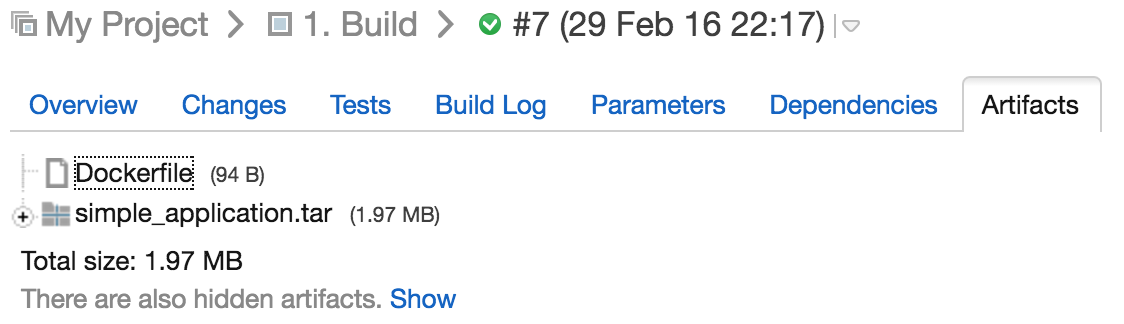

Now we can run our build and as a result we should see our artifacts in the "Artifacts" tab.

Our source code is no longer needed. We have everything required to build the Docker image. Now we have to create the image and release it with the right version. To keep the example simple we are going to use the current build number as the image version. We will also generate a file containing this version, which lets us pass the version on to the next steps.

Let's take a closer look at the Dockerfile. We copy the content of simple_application.tar (which contains all required libraries and scripts) to the image by using the ADD instruction. When the source is a local tar archive, ADD automatically unpacks it inside the image. Next we expose the port of our HTTP API and define how to launch our application by adding an ENTRYPOINT instruction.

For example our Dockerfile can look like this:

    FROM java:8

    ADD simple_application.tar .

    EXPOSE 4567

    ENTRYPOINT ["/simple_application/bin/simple_application"]
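
Since the ENTRYPOINT path depends on the directory layout inside the archive, it can be useful to check the archive before building the image. The following is only a sketch: the helper name `has_entrypoint` is made up for illustration, and it assumes the image's working directory is `/`, so that the archive is unpacked at the root of the image.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the archive contains the launcher
# that ENTRYPOINT points at.
has_entrypoint() {
    archive="$1"      # e.g. simple_application.tar
    entrypoint="$2"   # e.g. /simple_application/bin/simple_application

    # ADD unpacks into "." (assumed to be the image root), so the path
    # inside the archive is the ENTRYPOINT path without its leading slash.
    expected="${entrypoint#/}"

    # tar -tf lists member names; some archives prefix them with "./".
    tar -tf "$archive" | sed 's|^\./||' | grep -qxF "$expected"
}
```

A build step could then call `has_entrypoint simple_application.tar /simple_application/bin/simple_application` and fail early if the layout does not match.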

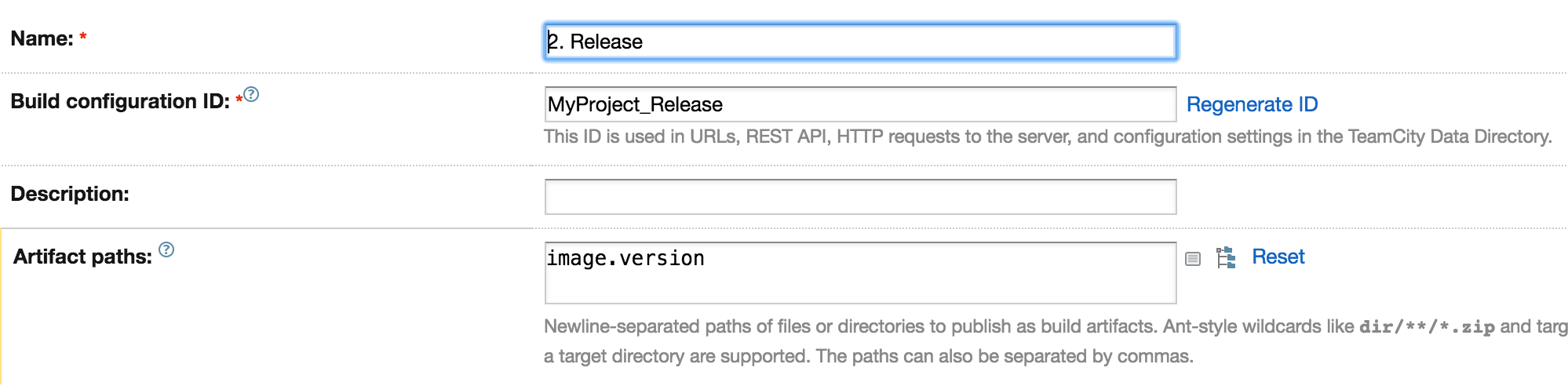

Defining the build configuration is very simple. In the general settings we define a new artifact: image.version. The content of this file will be generated in one of the build steps.

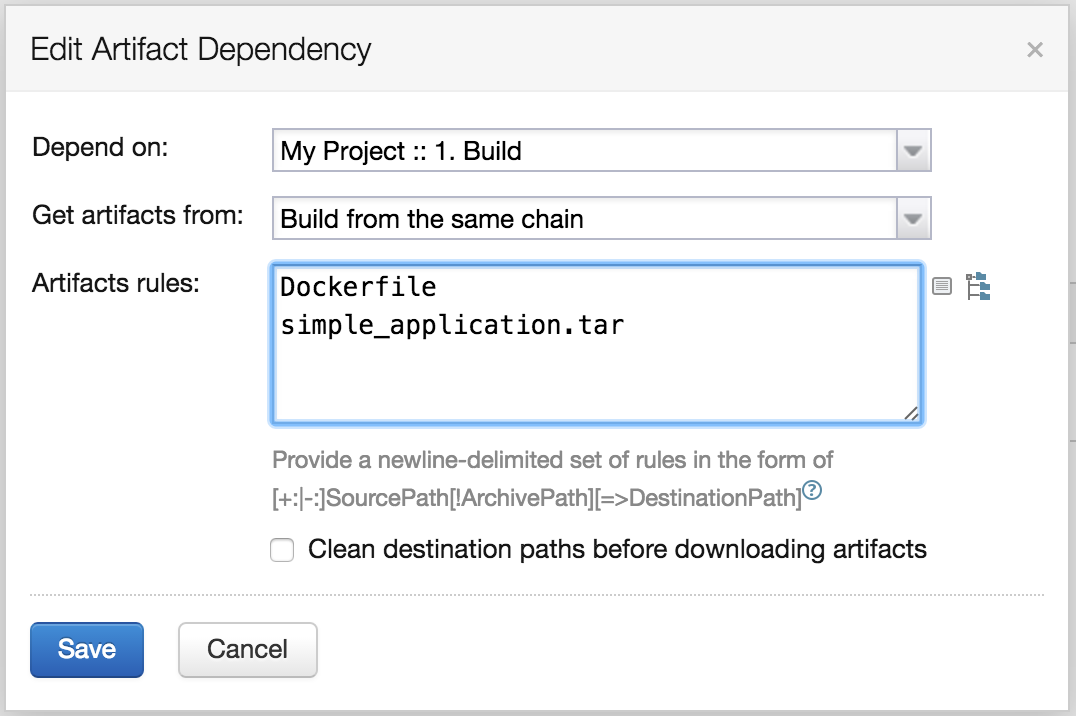

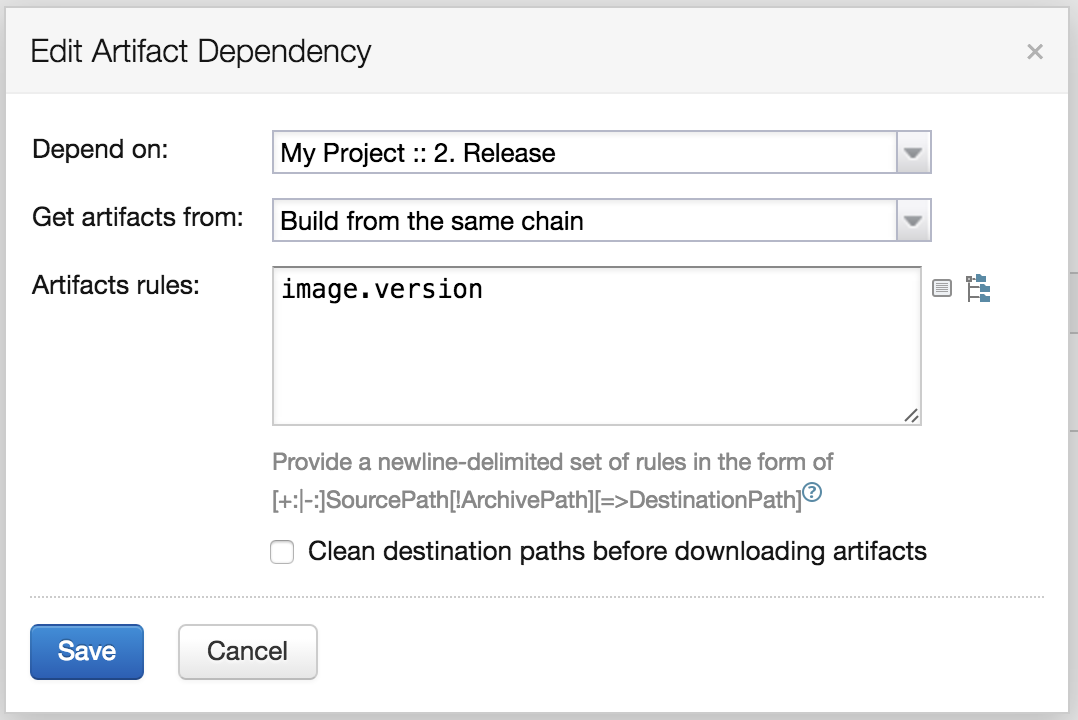

Without the artifact we won't be able to build any image. We have to tell our build how to find the artifacts generated during the Build phase. We can do that by defining an Artifact Dependency in TeamCity. We just have to choose a build configuration, define the artifacts from that build and TeamCity will add them to the working directory.

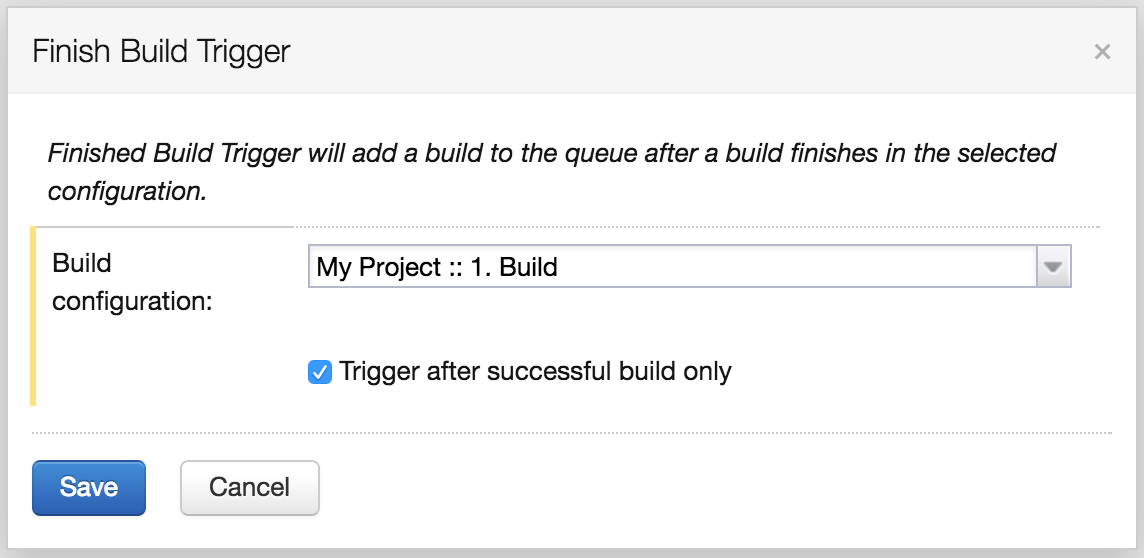

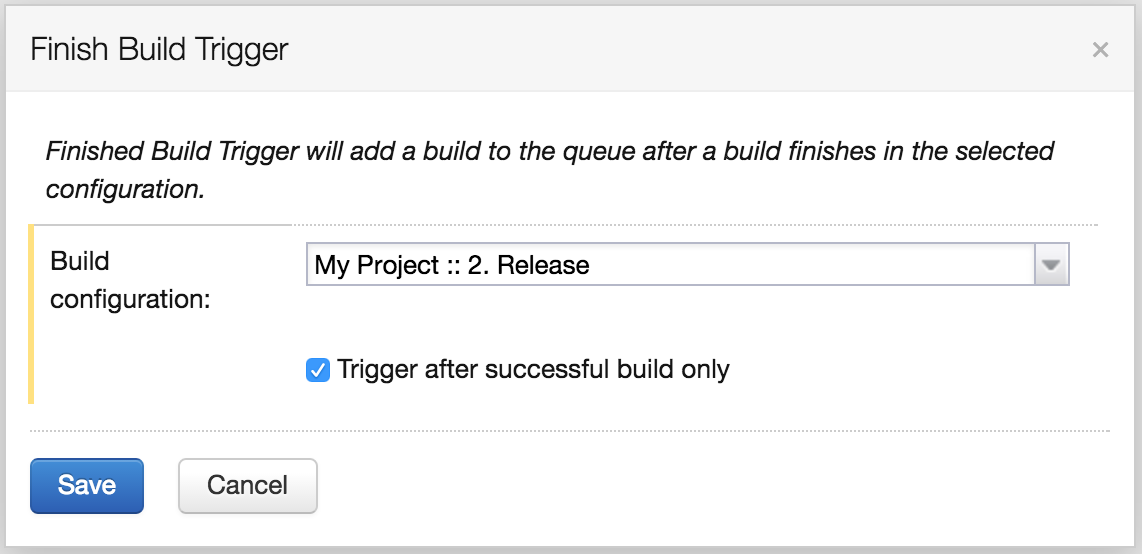

Finally, we have to trigger this build automatically once all tests run by the previous step have passed. By adding a Finish Build Trigger we can start this build right after TeamCity successfully finishes building the application.

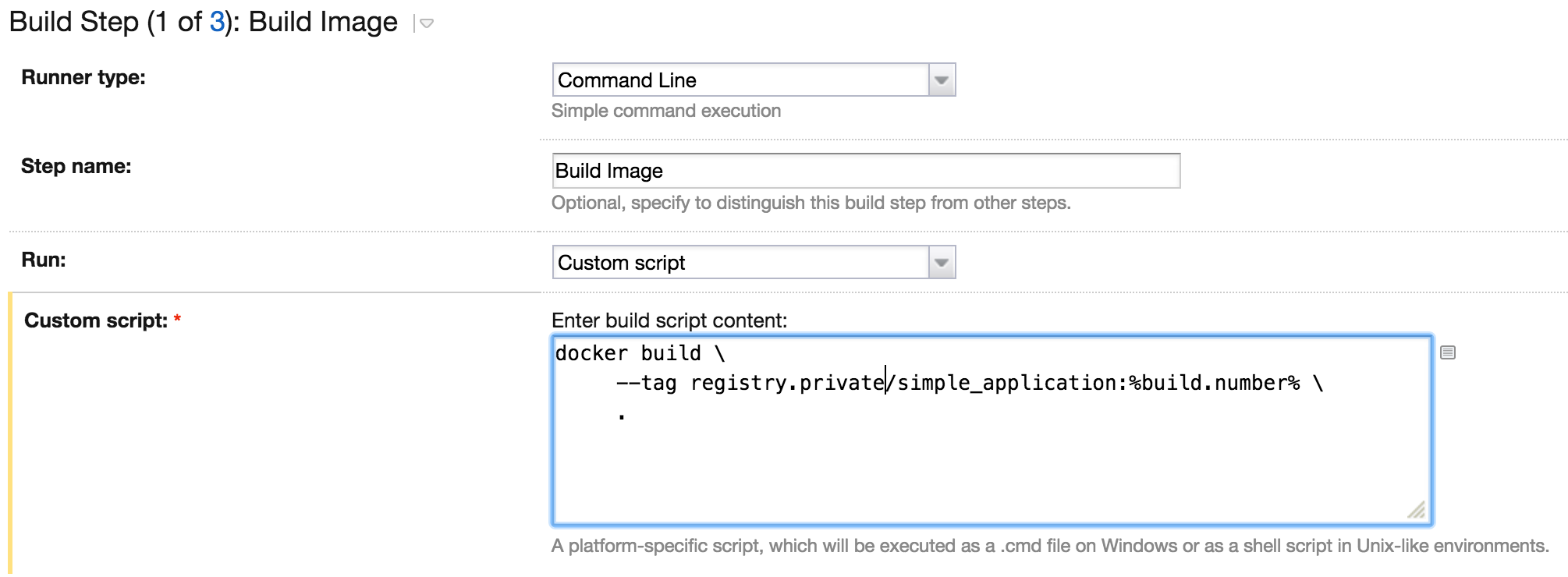

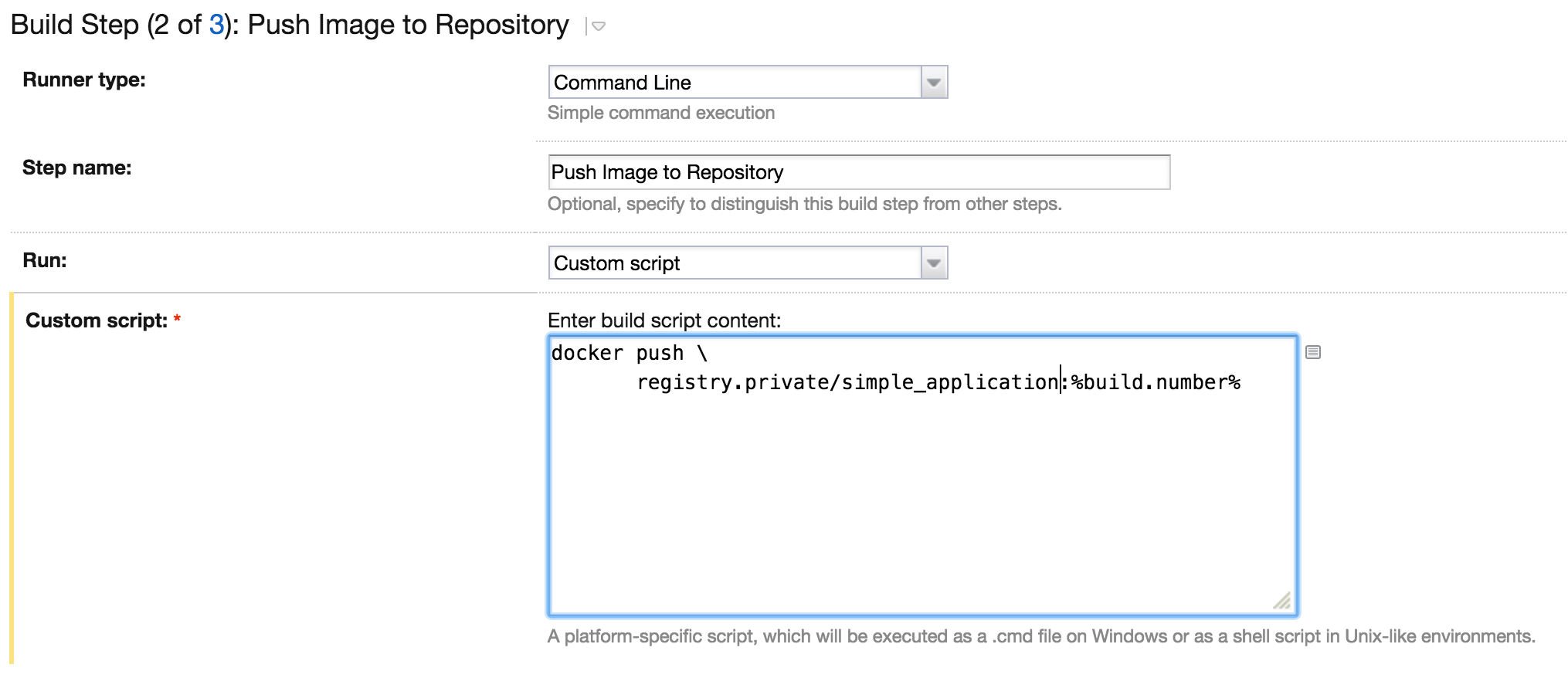

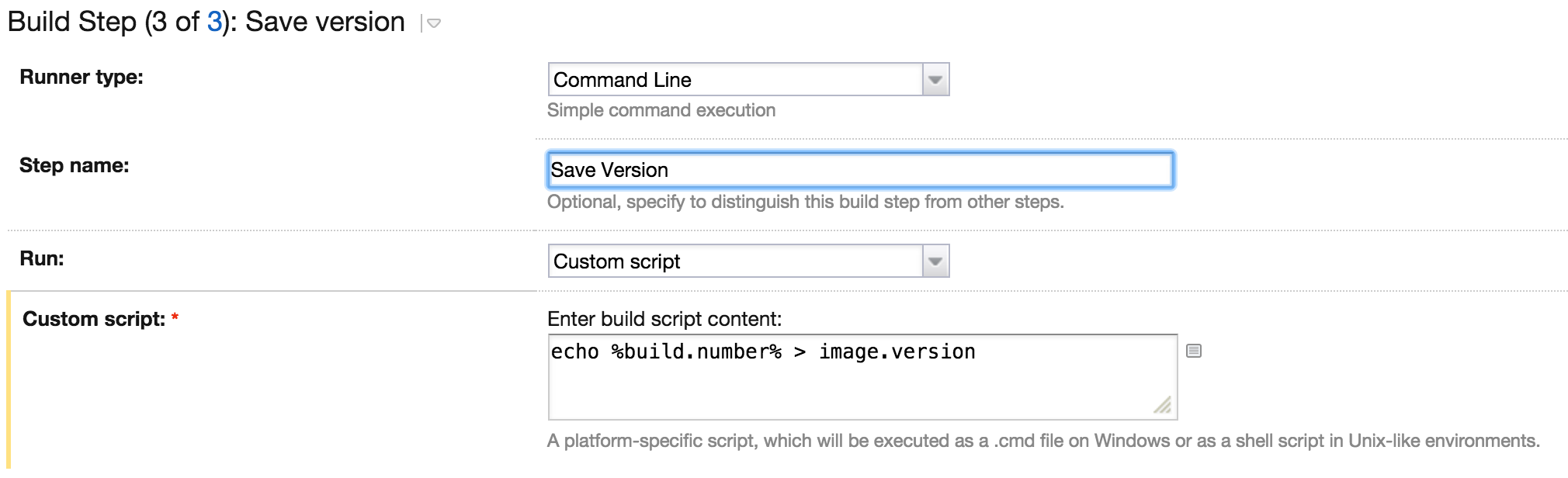

Now we are ready for a release. Three build steps must be introduced: Build Image, Push Image and Save Version. This time we will use a different runner type: Command Line. We can execute a shell script on a build agent. Because we have already installed Docker on our build agent we can use the docker command in our shell script.

To build the image we need to execute the following command:

    docker build --tag registry.private/simple_application:%build.number% .

Docker's build command takes our Dockerfile and builds an image named registry.private/simple_application with the tag %build.number%. %build.number% is a predefined TeamCity parameter that holds the current build number.

The image created in the previous step exists only on the agent machine. To make the image available to others we need to store it in a registry. We could use Docker Hub, but in our example we use a private registry available under the address registry.private. We can execute the following command to push the image to the registry:

    docker push registry.private/simple_application:%build.number%
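
The build and push commands can also live in one script, so that the image reference is composed in a single place and the push only happens after a successful build. This is a minimal sketch rather than the configuration used in this post: the function names `image_ref` and `release_image` are hypothetical, while the registry and image name are the ones from the commands above.

```shell
#!/bin/sh
# Registry host and image name as used in this post.
REGISTRY="registry.private"
IMAGE_NAME="simple_application"

# Compose the full image reference for a given version (hypothetical helper).
image_ref() {
    echo "${REGISTRY}/${IMAGE_NAME}:$1"
}

# Build the image from the Dockerfile in the current directory and
# push it only if the build succeeded (hypothetical helper).
release_image() {
    ref="$(image_ref "$1")"
    docker build --tag "$ref" . && docker push "$ref"
}

# In a TeamCity Command Line step the parameter reference is replaced
# with the real build number before the script runs:
#   release_image "%build.number%"
```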

The image is safely stored in the repository, but we need to do one more step: save the version of our image. Once again we run a shell script to generate an image.version file:

    echo %build.number% > image.version
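
Because the content of this file later becomes a Docker tag, a slightly more defensive variant could validate the value before writing it. The sketch below is an illustration, not part of the original pipeline: `save_version` is a hypothetical helper, and the check follows Docker's tag rules (letters, digits, underscores, periods and hyphens, not starting with a period or hyphen). It does not check the 128 character limit.

```shell
#!/bin/sh
# Hypothetical helper: write the version to a file, refusing values
# that could not be used as a Docker tag.
save_version() {
    version="$1"
    file="${2:-image.version}"   # defaults to the artifact name used above

    case "$version" in
        ''|[.-]*|*[!A-Za-z0-9_.-]*)
            echo "refusing to save invalid version: '$version'" >&2
            return 1
            ;;
    esac

    printf '%s\n' "$version" > "$file"
}

# In a TeamCity Command Line step:
#   save_version "%build.number%"
```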

In the previous step we created an image which we can use now to deploy our application. We are going to create another build configuration: Deploy. This build will run a Docker container on the TeamCity agent based on the image from the Release phase.

Our build configuration must contain three elements. The first one is a trigger. Again, we use the Finish Build Trigger with a dependency to the Release build configuration.

The second element is a version string of our image. We can obtain this information from the artifact created by the Release build.

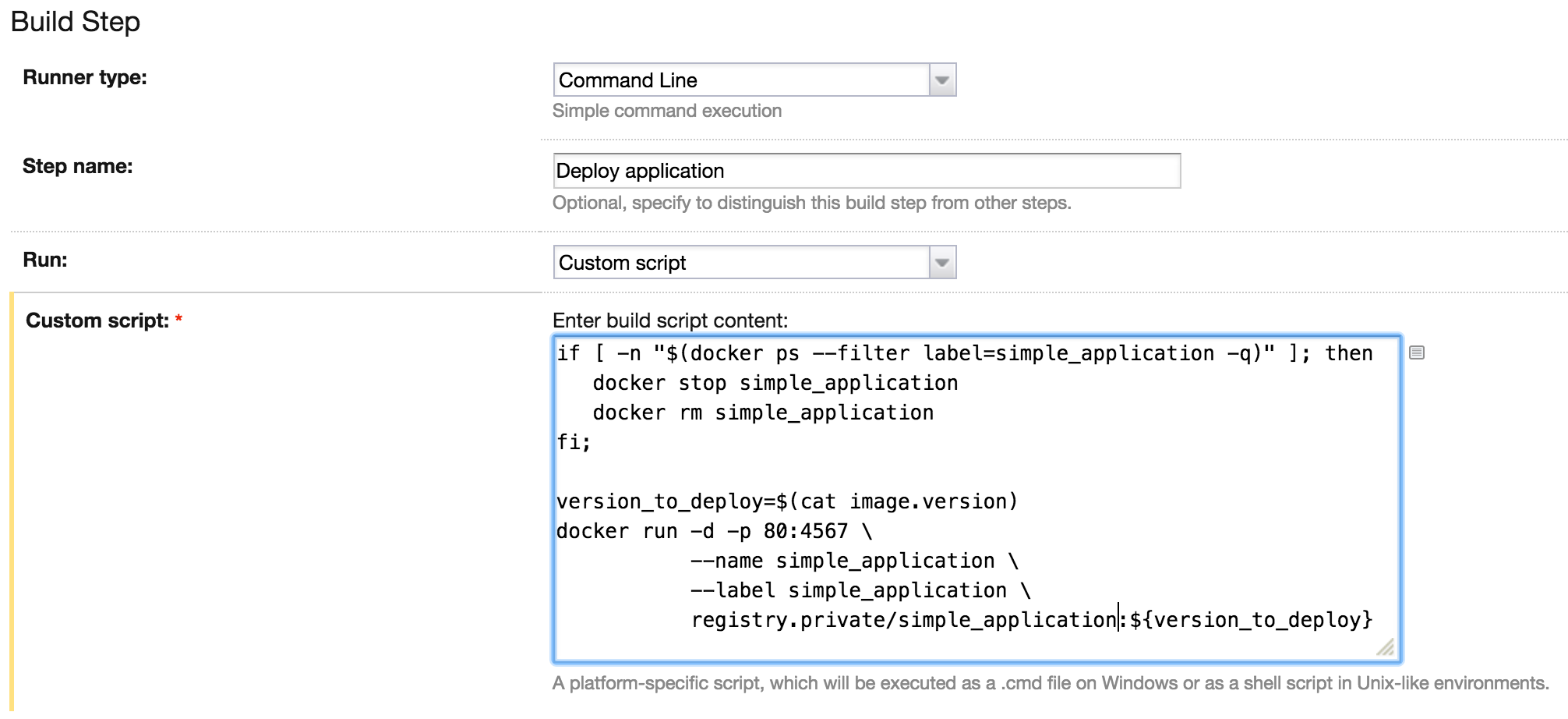

The last step is a little bit more complicated. Before we deploy the new version of the application, the deployment script needs to check whether a container with the previous version is already deployed on the machine. If there is such a container, we need to stop and remove it. Next we read the current version from the artifact and create the new container based on this version.

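    # Remove the previous container, if any (-a also matches stopped ones,
    # which would otherwise still block the container name).
    if [ -n "$(docker ps -a --filter label=simple_application -q)" ]; then
        docker stop simple_application
        docker rm simple_application
    fi

    version_to_deploy=$(cat image.version)

    # Map port 80 on the host to the application's port 4567.
    docker run -d -p 80:4567 \
        --name simple_application \
        --label simple_application \
        registry.private/simple_application:${version_to_deploy}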
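
One possible variation of the script above, shown only as a sketch: it fails early when the version file is missing or empty, and it replaces the explicit check with `docker rm --force`, which stops a running container before removing it. The function names `read_version` and `deploy` are hypothetical; the container name, label, ports and image are the ones from the script above.

```shell
#!/bin/sh
CONTAINER="simple_application"
IMAGE="registry.private/simple_application"

# Print the version stored in the artifact. Fails if the file is missing
# or contains only whitespace, so we never run the image with an empty tag.
read_version() {
    [ -r "$1" ] || { echo "cannot read $1" >&2; return 1; }
    v="$(tr -d '[:space:]' < "$1")"
    [ -n "$v" ] || { echo "no version in $1" >&2; return 1; }
    printf '%s\n' "$v"
}

# Replace whatever container currently holds the name, running or not.
deploy() {
    version="$1"
    # Any error from rm (for example, no such container) is ignored on purpose.
    docker rm --force "$CONTAINER" >/dev/null 2>&1 || true
    docker run -d -p 80:4567 \
        --name "$CONTAINER" \
        --label "$CONTAINER" \
        "$IMAGE:$version"
}

# Usage in the Deploy step:
#   version="$(read_version image.version)" && deploy "$version"
```

The trade-off is that the forced removal hides every error from `docker rm`, not just the missing container case, so the explicit check may be preferable when those errors matter.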

The Continuous Deployment pipeline is ready. Now every change in the master branch of our repository will build, test, release and deploy our application.

The Continuous Deployment pipeline described in this post is of course simplified. In a real project we would probably want to run additional tests in a production-like environment between the Release and Deploy steps, or introduce zero-downtime deployments, but our approach to any deployment should remain unchanged.

Use the Docker image. It lets you ensure a consistent execution environment for your application at every stage of the Continuous Deployment pipeline. Now you decide how the code is executed, and you can no longer blame admins for installing the wrong version of Java or Ruby.
